Machine Learning Methods for Analyzing Multisensory Integration with Magnetoencephalography

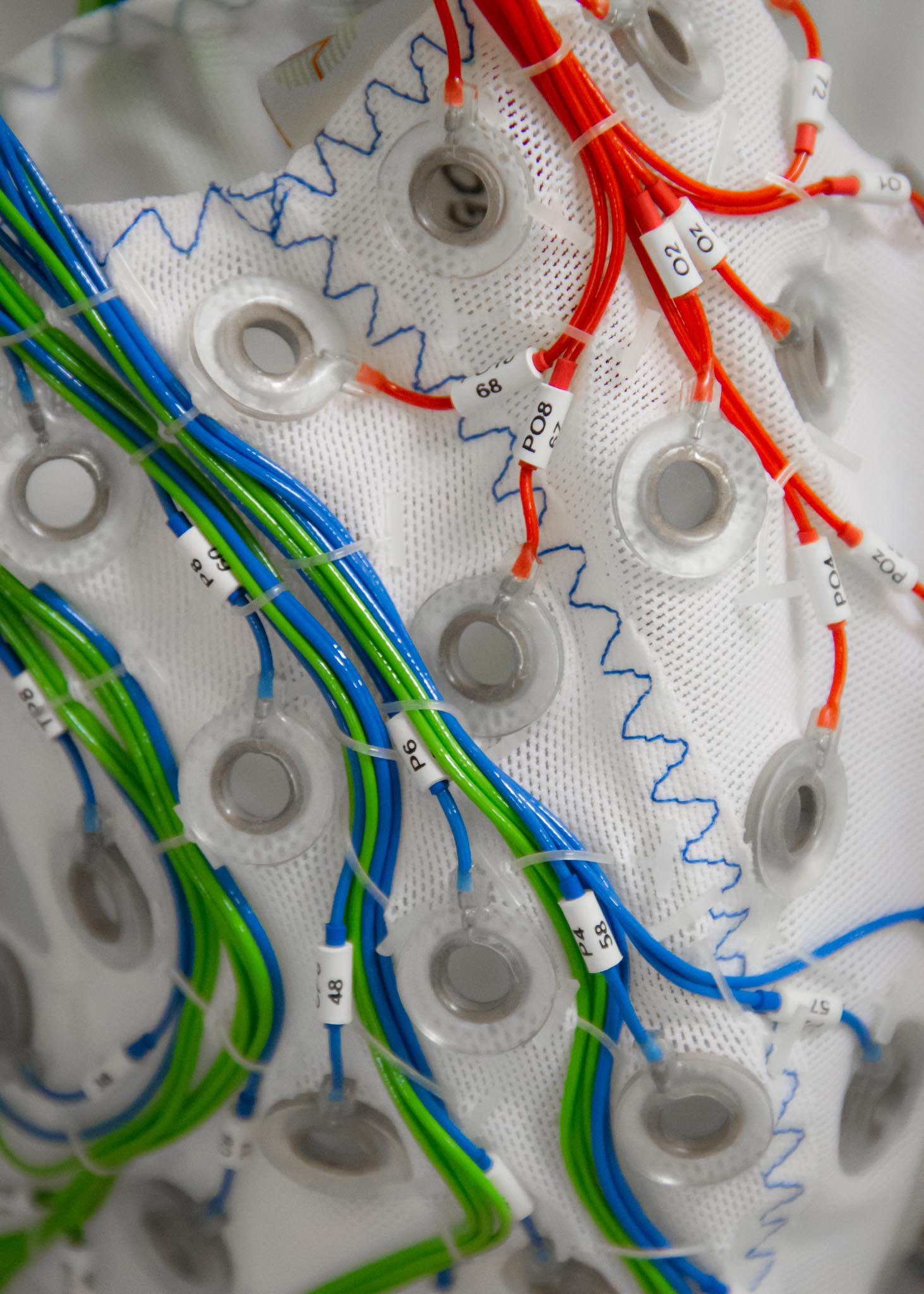

I delivered an oral presentation at the Magnetoencephalography Laboratory of the McGovern Institute for Brain Research at MIT summarizing the results of my research internship under the supervision of Dr Dimitrios Pantazis. The project, part of an effort supported by the National Science Foundation, focused on developing machine learning methods for processing magnetoencephalography data and on understanding how the human brain binds visual and auditory information into a unified percept.

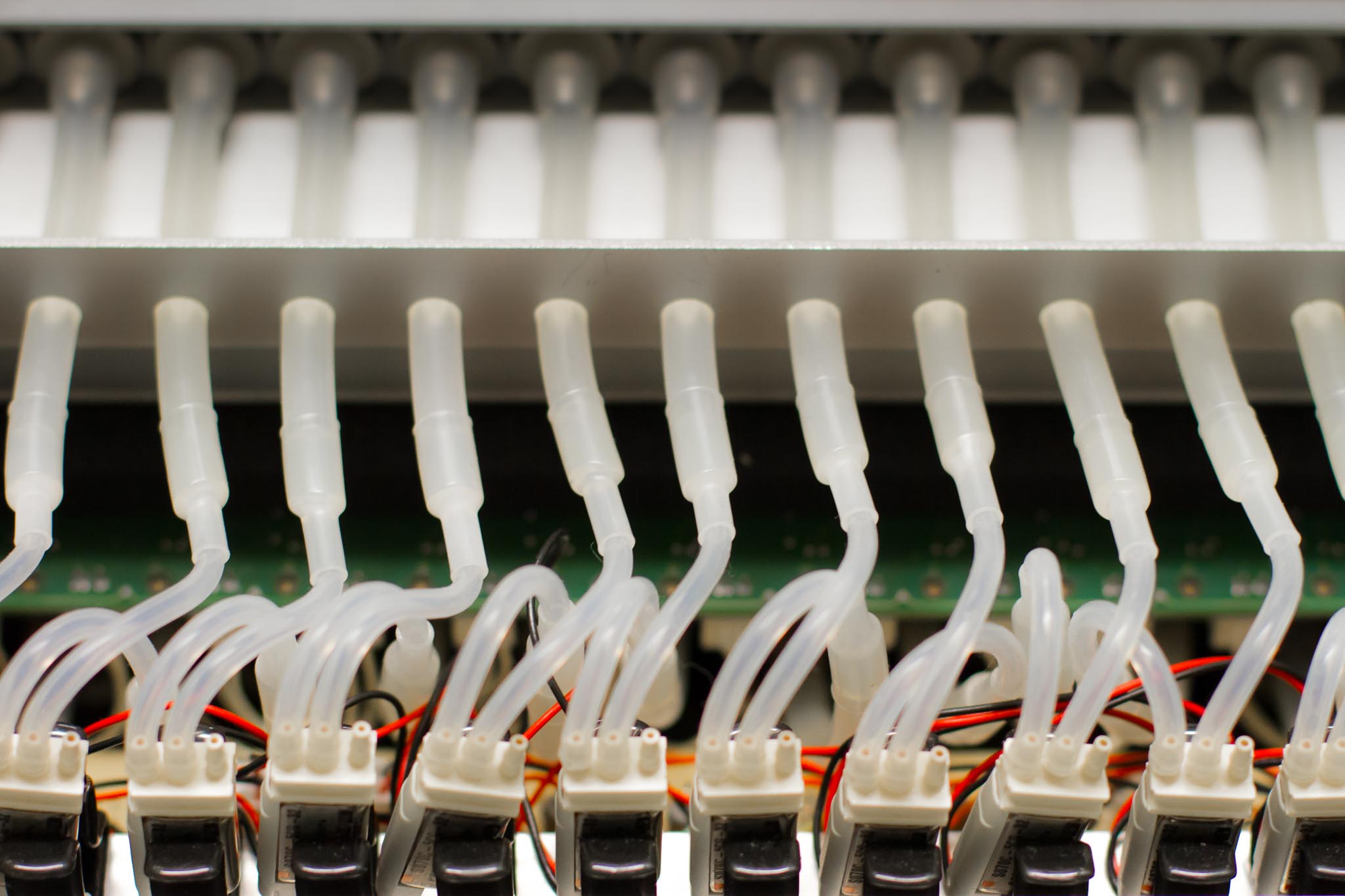

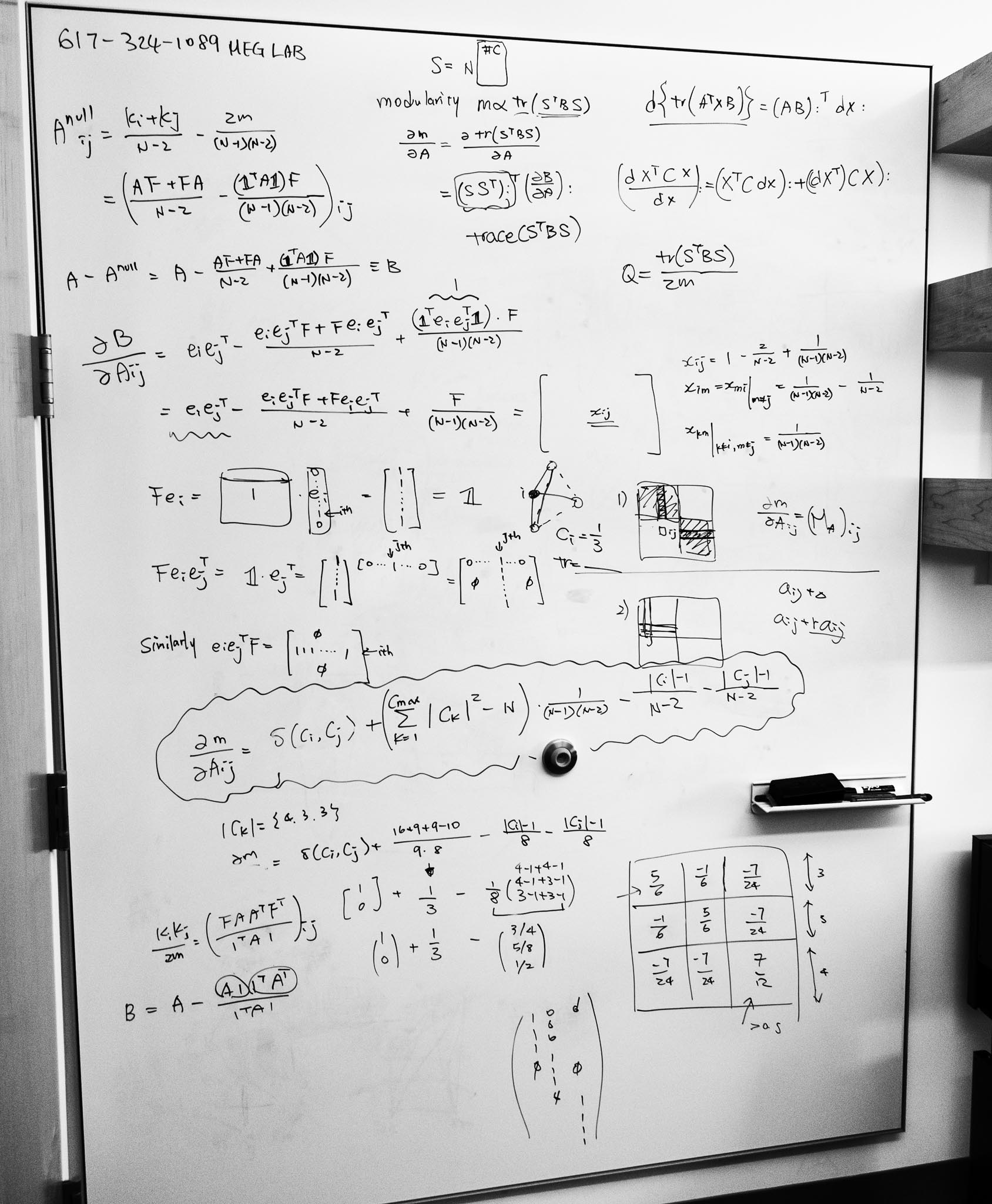

During the internship, I designed and implemented a processing pipeline for magnetoencephalography (MEG) data to study multisensory integration and its temporal dynamics. The work involved preprocessing MEG recordings, extracting time-resolved spatial patterns of sensor activity and applying a range of machine learning techniques to identify brain signals associated with the alignment, conflict or separation of visual and auditory stimuli. I focused on how temporal offsets between the visual and auditory channels affect the neural signatures of binding, and on how such timing changes can disrupt integration.
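The idea of classifying time-resolved spatial patterns can be illustrated with a minimal sketch of time-resolved multivariate decoding. It assumes epoched data arranged as trials × sensors × time points and two hypothetical condition labels (for example congruent versus incongruent audiovisual trials). It uses a simple cross-validated nearest-centroid classifier as a stand-in, since the classifier used in the actual pipeline is not specified here. The function names `time_resolved_decoding` and `make_synthetic` and the toy data are illustrative assumptions, not the pipeline's code.

```python
import numpy as np


def time_resolved_decoding(X, y, n_folds=5, seed=0):
    """Cross-validated nearest-centroid decoding at every time point.

    X: array of shape (n_trials, n_sensors, n_times), epoched sensor data.
    y: array of shape (n_trials,), binary condition labels (0 or 1).
    Returns an array of shape (n_times,) with held-out accuracy per time point.
    """
    rng = np.random.default_rng(seed)
    n_trials, _, n_times = X.shape
    # Shuffle trials once and split them into folds (same folds at every time point).
    folds = np.array_split(rng.permutation(n_trials), n_folds)
    accuracy = np.zeros(n_times)
    for t in range(n_times):
        patterns = X[:, :, t]  # spatial pattern across sensors at time t
        correct = 0
        for test_idx in folds:
            train = np.ones(n_trials, dtype=bool)
            train[test_idx] = False
            X_train, y_train = patterns[train], y[train]
            # Standardize each sensor with training-fold statistics only,
            # so no information from the test trials leaks into the model.
            mu = X_train.mean(axis=0)
            sd = X_train.std(axis=0) + 1e-12
            z_train = (X_train - mu) / sd
            z_test = (patterns[test_idx] - mu) / sd
            # One centroid (mean pattern) per condition.
            centroids = np.stack([z_train[y_train == c].mean(axis=0) for c in (0, 1)])
            # Predict the condition whose centroid is nearest (squared Euclidean).
            dists = ((z_test[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
            predicted = dists.argmin(axis=1)
            correct += int((predicted == y[test_idx]).sum())
        accuracy[t] = correct / n_trials
    return accuracy


def make_synthetic(n_trials=200, n_sensors=30, n_times=50,
                   onset=20, offset=35, effect=1.0, seed=1):
    """Hypothetical toy data: condition 1 adds a fixed spatial pattern
    to every trial, but only between time samples onset and offset."""
    rng = np.random.default_rng(seed)
    y = np.repeat([0, 1], n_trials // 2)
    X = rng.standard_normal((n_trials, n_sensors, n_times))
    pattern = rng.standard_normal(n_sensors)
    X[y == 1, :, onset:offset] += effect * pattern[:, None]
    return X, y


if __name__ == "__main__":
    X, y = make_synthetic()
    acc = time_resolved_decoding(X, y)
    print("peak accuracy %.2f at sample %d" % (acc.max(), acc.argmax()))
```

On the synthetic data, accuracy stays near chance (0.5) outside the injected window and rises sharply inside it. A time course like this is what indicates when two conditions become distinguishable in the sensor patterns. MEG decoding studies often use linear classifiers such as support vector machines or linear discriminant analysis instead of a nearest-centroid rule. The per-time-point loop and the training-fold-only standardization carry over unchanged.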

In the presentation, I discussed the architecture of the MEG analysis pipeline, results from multivariate classification experiments, and the early patterns we observed in response to temporally congruent versus incongruent audiovisual stimuli. I also described how these findings relate to broader questions about temporal processing, cortical organization and the computational principles underlying multisensory perception.